At the start of the 2021 Formula 1 season, Ferrari had a mountain to climb.

Despite being one of the most well-funded and prestigious teams on the grid, they’d massively underperformed the previous year. Well, they were doing great, until… ahh, how shall I put it… a “potential irregularity” was discovered in how their engine was operating (others might say “They got caught cheating”).

After this came to light, regulations were changed, engine adjustments were made – and then Ferrari’s performance dropped massively. They went from being one of the top 3 teams to being just a middling one.

This whole situation probably caused sleepless nights for a lot of people. But I’d wager none more than Carlos Sainz Jr.

You see, 2021 would be Sainz’s first season at Ferrari. He’d moved there from McLaren, a British team experiencing quite the opposite turn of fortune in 2020 – previously middling, McLaren were now in the ascendant, and were in contention to take Ferrari’s spot as the number 3 team on the grid.

It seemed like Sainz had jumped ship… right onto one that was sinking.

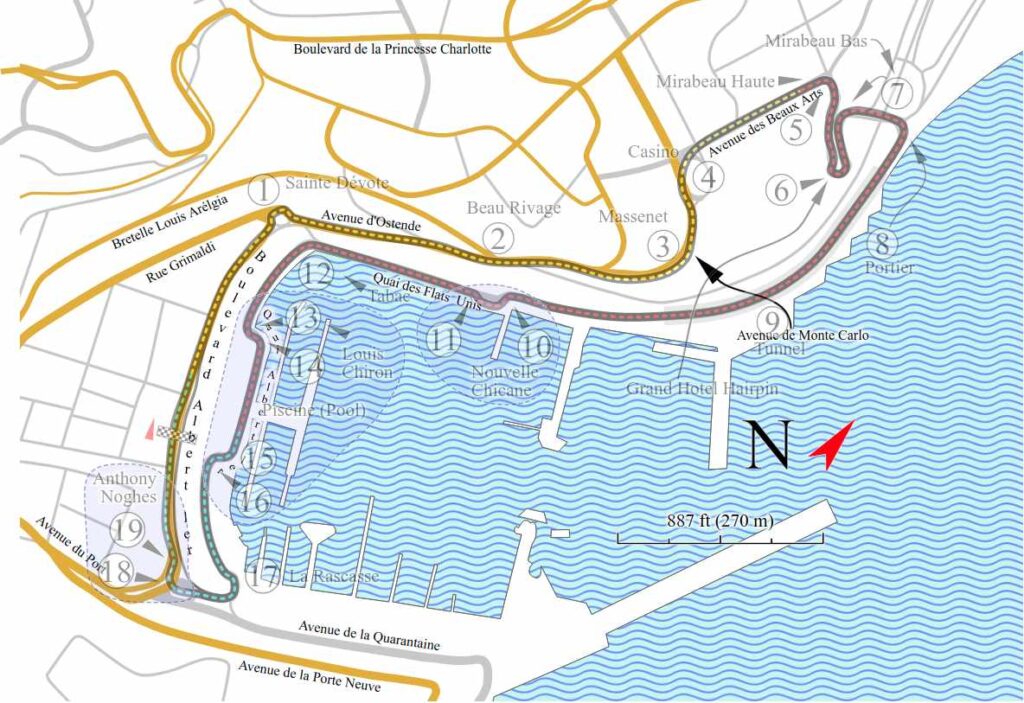

So you’d think that if Sainz managed to finish second for Ferrari, he’d be ecstatic. That would be true of any race, but especially if it happened at Monaco, the most glamorous event on the calendar. Not only would it be a great personal achievement, it would suggest that perhaps Ferrari weren’t a sinking ship after all.

So why, on May 23 2021, after he did finish second at Monaco, did Sainz describe the result as “bittersweet”?

This isn’t the first time that second place has been a source of sadness for Sainz. It happened before at Monza in 2020, when Sainz was still at McLaren. He was in second place and chasing down the leader, getting closer every lap… but unfortunately for him, he couldn’t get past.

You can hear the radio chatter between Sainz and his engineer in those final laps of that race here (his comments start at 1:19).

Not a happy chappy, is he?

To put that Monza race in perspective, finishing fifth or sixth would have been a good result in that McLaren car at that time. To finish second was an incredible result. If you’d offered him that before the race weekend, he’d have bitten your hand off. He should have been over the moon, but he was disappointed. Why?

Comparisons influence emotions

A study published back in 1995 might have the answer. The idea is that the happiness we get from something isn’t based on an objective assessment of that thing. It’s based on what we compare it to.

The researchers, Victoria Husted Medvec and Thomas Gilovich of Cornell, and Scott Madey of the University of Toledo, studied the happiness shown by medal winners at the 1992 Olympics. They had undergraduate students rate how happy each athlete looked, both in footage from the moment the athletes learned their result and on the medal stand.

If happiness was based on an objective assessment of circumstances, you’d expect gold medallists to be happiest, then silver, then bronze, right? But that’s not what they found.

The researchers focused on the silver and bronze medallists (the golds, presumably, were happiness incarnate). And weirdly, the bronze medallists were rated as happier than the silver medallists. The reason for this might be what the athletes were comparing their results against.

The silver sadness effect

The researchers believe that when you win silver, you compare yourself to gold. You focus on the one single person in the whole entire world who beat you – rather than the billions of others over whom you just proved your superiority! This was clearly what was on Sainz’s mind after his second place finish at Monaco. As he said after the race:

“You know, the bittersweet feeling is still there because I had the pace to put it on pole or at least to win this weekend, and the fact that in the end we didn’t quite manage, is not great.”

The result was bittersweet because he’d changed his comparison point. Before the race, fourth might have been a great stretch goal, and one he’d have been happy with. But due to a few mishaps, the fastest cars weren’t at the front where he expected them to be. Suddenly he found himself in second with a chance to win – and that became his new reference point. How he finished in relation to that reference point determined how he felt.

The bronze bliss effect

And why are the bronze medallists apparently happier than the silvers?

Perhaps it’s because they compare themselves downwards – not up. They are just happy to be up there on the podium, as part of the winners’ group – knowing that they were just one place away from not getting any recognition at all.

This was clearly on the mind of Lando Norris of McLaren, who finished third at Monaco, behind his former teammate Carlos Sainz. As Lando told Sky F1:

“It’s incredible… it’s a long race, especially with Perez the last few laps, that pressure of seeing him in my mirrors after every corner. It’s stressful… I don’t know what to say! I didn’t think I’d be here today. It’s always a dream to be on the podium here, so it’s extra special.”

As you can see, he’s comparing himself to fourth-placed Perez – and he’s extremely happy with the result.

(It’s not the whole picture, obviously)

Now, of course, there’s more to the story than simply finishing second versus third. And it’s not as if every single person who finishes third is happier than the person in second. There are loads of other factors at play, like:

- Who else is competing. It’d be interesting to see if there’s a difference when competing against uber-champs – like if you’re swimming against someone like Michael Phelps, would you be happier with silver because you didn’t see yourself as really in the running for the gold? (or maybe elite athletes just don’t think that way).

- Your relative standing in the pack – if you’re a huge underdog who’d never dream of finishing 10th, never mind second, it might make a difference.

- Your history – if you’ve never had a podium/medal, then getting that under your belt will bring its own happiness.

- How close the contest was. If the best competitor was way out in front, and the second and third best grappled hard against each other with fourth place way behind them, then that second place might feel sweeter.

So you have to think that this silver sadness effect is just one part of a complex web of factors that influence how athletes feel after the contest.

That’s if it exists at all, of course – we’ve only discussed one study here (although another study of judo fighters found the same thing). Still, the general idea that comparison affects our happiness (as well as things like our preferences and perceptions) is more broadly supported, whether you want to call it the comparison trap, the contrast effect bias, or whatever.

Anyway, I guess the practical application of this is: try to look down on other people as much as possible.

Just kidding!

The real practical application, I suppose, would be something like deliberately expressing gratitude, or trying to become more aware of the good things and people you have in your life. I think that often, if we’re a little pissed off or annoyed, we’ve talked ourselves into that state by focusing on all the crap that went wrong.

So sometimes – not always, but sometimes – we might be able to talk ourselves out of it too, by changing our comparison point. That said, it might not work when you have more serious problems going on, or when things are super meaningful to you (like a racing career you’ve dedicated your whole entire life to).